MAP_SHARED and RSS/SHR

Recently we were debugging a memory leak on an experimental debugging branch.

It turned out to be a false alarm: the growth was caused by tokio-console, which we had added to a component to debug something.

However, while investigating, we noticed higher-than-expected RES and SHR values reported by htop on another server that was not running the tokio-console branch.

We were using shared-memory (mmap) queues to communicate between two processes.

Once their pages are touched, these mappings are counted in RSS (resident set size) and also in SHR (shared memory).

However, if the same file or shared-memory object is mapped more than once in a process, each mapping is added to RSS and SHR again, even though all of the mappings are backed by the same physical pages.

Our application was re-mapping the shared memory for each new client connection, so RSS and SHR were reported as roughly mmap size × number of connections.

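This is easy to fix, and the inflated numbers are not really a problem in themselves, since no extra physical memory is used. The main cost is that the kernel has to spend resources maintaining all the mappings: a separate VMA for each map, plus page-table entries for every touched page in each one.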

To see this, here is a small example program that mmaps the same 1GB file 2000 times. First, create a 1GB file of zeros:

```shell
dd if=/dev/zero of=1GB bs=1G count=1
```

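```cpp
#include <cerrno>
#include <cstddef>
#include <cstring>
#include <fcntl.h>
#include <iostream>
#include <sys/mman.h>
#include <unistd.h>

int main() {
    int fd = open("1GB", O_RDONLY);
    if (fd == -1) {
        std::cerr << "open FAILED: " << std::strerror(errno) << '\n';
        return 1;
    }

    constexpr std::size_t kMapSize = 1024UL * 1024 * 1024;  // 1GB
    const std::size_t page_size = static_cast<std::size_t>(sysconf(_SC_PAGESIZE));
    long value = 0;

    // Map the same file 2000 times; each call creates a separate mapping.
    for (int n = 0; n < 2000; ++n) {
        void *addr = mmap(nullptr, kMapSize, PROT_READ, MAP_SHARED, fd, 0);
        if (addr == MAP_FAILED) {
            std::cerr << "mmap FAILED: " << std::strerror(errno) << '\n';
            return 1;
        }
        // Read one byte from each page so the memory is actually mapped.
        // The volatile pointer keeps the optimiser from removing the reads.
        const volatile char *map = static_cast<const volatile char *>(addr);
        for (std::size_t offset = 0; offset < kMapSize; offset += page_size) {
            value += map[offset];
        }
    }
    close(fd);  // existing mappings stay valid after the descriptor is closed

    // Keep the process alive so it can be inspected with htop, ps or smem.
    for (;;) {
        sleep(1);
    }

    return static_cast<int>(value);  // never reached
}
```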

Here I use O_RDONLY and PROT_READ, and reading one byte from each page is enough to fault it in.

Writing to each page would also work but is much slower; in that case we would need O_RDWR and PROT_READ | PROT_WRITE.

Note: if we do not actually access the pages, the mappings are only counted as virtual memory, not in RSS or SHR.

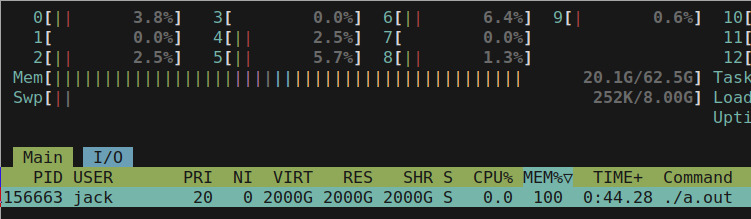

Now the virtual size, RSS, and shared memory are all reported as about 2TB (2000 maps × 1GB).

Clearly we have not actually allocated 2TB; the machine only has 64GB of physical RAM.

We can see all the mappings in /proc/<pid>/maps:

```console
$ cat /proc/$(pidof a.out)/maps | grep 1G | head -n 5
7df4a9000000-7df4e9000000 r--s 00000000 103:03 46409234 /home/jack/scratch/1GB
7df4e9000000-7df529000000 r--s 00000000 103:03 46409234 /home/jack/scratch/1GB
7df529000000-7df569000000 r--s 00000000 103:03 46409234 /home/jack/scratch/1GB
7df569000000-7df5a9000000 r--s 00000000 103:03 46409234 /home/jack/scratch/1GB
7df5a9000000-7df5e9000000 r--s 00000000 103:03 46409234 /home/jack/scratch/1GB
```

```console
$ cat /proc/$(pidof a.out)/maps | awk '/1GB/ {print $NF}' | sort | uniq -c
   2000 /home/jack/scratch/1GB
```

So how can we tell the actual memory usage without double-counting the shared mappings?

We can look at the USS (Unique Set Size) and PSS (Proportional Set Size).

USS shows the memory that is private to this process, while PSS adds each shared page's size divided by the number of times that page is mapped.

```console
$ ps -o pid,comm,pmem,vsz,rss,pss,uss -p $(pidof a.out)
    PID COMMAND  %MEM        VSZ        RSS     PSS USS
2003527 a.out    99.9 2097158112 2097153696 1048726 208
```
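As a rough check of these numbers (ps reports KiB here), assuming no other process maps the file: every one of the 2000 mappings adds the full 1GB file to RSS, whereas PSS charges each page 1/2000 of its size per mapping, so the file ends up counted exactly once.

$$
\begin{aligned}
\text{RSS}_{\text{file}} &= 2000 \times 1\,048\,576\ \text{KiB} = 2\,097\,152\,000\ \text{KiB} \approx 1.95\ \text{TiB} \\
\text{PSS}_{\text{file}} &= 2000 \times \frac{1\,048\,576\ \text{KiB}}{2000} = 1\,048\,576\ \text{KiB} = 1\ \text{GiB}
\end{aligned}
$$

The remaining 1,696 KiB of RSS and 150 KiB of PSS presumably come from the program itself and the libraries it maps.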

The smem utility can also be used here:

```console
$ smem -k -P a.out
    PID User     Command      Swap      USS      PSS      RSS
2003527 jack     ./a.out         0   208.0K  1023.7M     2.0T
```